CDC vs DW Patterns part 2

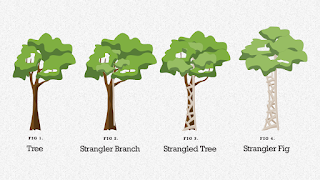

The Strangler Pattern

The Strangler Pattern works for any kind of software: legacy or new, frontend, backend, DevOps, data, whatever. This pattern is the primary way to slice and dice legacy applications (often monoliths). Some people think this pattern only works for web applications, and that is not true at all; later on in this post, I will explain some of the trade-offs and the complete spectrum of this pattern. But before we go there, let's abstract and simplify things a bit and understand how the basics work, and then after that, we can talk about challenges.

The Strangler Pattern is not really much different from a Proxy Pattern. However, the Strangler has phases (1 - Transform, 2 - Co-Exist, 3 - Eliminate). Phase 1 means we need to stop the bleeding. When we introduce a proxy in front of the legacy code, we have a router in place. Imagine that a module or part of a legacy system has 10 features, and we want to migrate all 10 features to the new service. By having the strangler there, we allow new features to be created on the new system. That's already a win. Secondly, all the low-hanging fruit can be easily moved to the new codebase or even rewritten.

However, this is not a free lunch; killing a feature or module could take months, even years, and phase 2 (co-exist) could last a long time. That's fine. There will be cases where it will be easy to isolate features, tables, or pieces of code into a new service and do clear routing between what is computed on the old system and what is computed on the new one.

Finally (phase 3), when everything is migrated to the new system, we can switch the source of truth for that system and DELETE part of the old legacy code and sometimes even data.
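To make the three phases a bit more concrete, here is a minimal Python sketch of the routing idea. It is only an illustration under simple assumptions: the `StranglerRouter` class, the feature names, and the handler functions are all hypothetical, and a real strangler would usually sit at the HTTP or driver level rather than in-process.

```python
from typing import Callable, Dict, Iterable

Handler = Callable[[dict], dict]


class StranglerRouter:
    """Hypothetical in-process router standing in for the strangler proxy."""

    def __init__(self, legacy: Handler) -> None:
        self._legacy = legacy
        # Features already moved to the new service (phase 2: co-exist).
        self._migrated: Dict[str, Handler] = {}

    def migrate(self, feature: str, handler: Handler) -> None:
        """Route one feature to the new system from now on."""
        self._migrated[feature] = handler

    def handle(self, feature: str, request: dict) -> dict:
        """Send the request to the new handler if migrated, else to legacy."""
        handler = self._migrated.get(feature, self._legacy)
        return handler(request)

    def can_eliminate_legacy(self, all_features: Iterable[str]) -> bool:
        """Phase 3 is only possible once every known feature is migrated."""
        return set(all_features).issubset(self._migrated)


# Hypothetical handlers, used only for this example.
def legacy_app(request: dict) -> dict:
    return {"served_by": "legacy", **request}


def new_billing(request: dict) -> dict:
    return {"served_by": "new", **request}


router = StranglerRouter(legacy_app)
router.migrate("billing", new_billing)

billing_response = router.handle("billing", {"id": 1})
reports_response = router.handle("reports", {"id": 2})
ready_to_delete = router.can_eliminate_legacy({"billing", "reports"})
```

In this sketch, `billing` is served by the new code while `reports` still goes to the legacy, which is the co-existing phase. Only when every feature has been migrated does `can_eliminate_legacy` say the old code is a candidate for deletion.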

In practice, things are a bit more complicated. You might need to keep data on the legacy system due to coupling, and that's fine if the legacy system is just READING data. As long as the legacy is just READING and the SOT (Source of Truth) is your new service (assuming phase 3 is done), it is completely fine to replicate data for READING purposes. However, you want to avoid that where you can, since schema changes can become a real problem for you. Bi-directional writes should be avoided as much as possible because they are complex and costly to maintain - any change on either side (legacy or new system) can break the whole thing.

Of all evils, migrating the schema later on might not be a bad idea after all. Once you have managed to isolate a part of the system and have a common interface between the consumers and the database (a.k.a. SOA contract), you will be in a much better and easier position to perform schema migrations and refactorings.

Combining Strangler and CDC

Strangler works for code. But what about data? Well, either we will need to do some sort of Dual Write + Forklift, or we will need to use CDC (CDC is much better). In fact, it is possible to combine both.

CDC will take care of the data for you. It's possible to make the 2 changes at the same time (code change and data/schema change); however, that might create much more coupling between the old and new systems, forcing you into some sort of integration system (a 3rd system) or into very complex CDC transformation code.

Keep in mind that having 2 or more sources of truth (bi-directional writes) is the problem. Copying data (replicating via the CDC event log) to multiple places is not bad; it is OK. But you can only rely on that if the schema is the same. Because, like I said, if you have a different schema, you will have another problem, so you might want to delay that for later.
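As a rough illustration of same-schema replication from a change log, here is a small Python sketch. It assumes a single source of truth that emits ordered change events and a read-only replica that simply replays them. The `ChangeEvent` and `Replica` names and the `offset` field are hypothetical and not tied to any specific CDC tool.

```python
from dataclasses import dataclass
from typing import Dict, Optional


@dataclass(frozen=True)
class ChangeEvent:
    """One entry of a hypothetical change log, same schema on both sides."""
    offset: int                 # position in the log, strictly increasing
    op: str                     # "insert", "update" or "delete"
    key: int                    # primary key of the affected row
    row: Optional[dict] = None  # full row image for insert/update


class Replica:
    """Read-only copy kept in sync by replaying the log in order."""

    def __init__(self) -> None:
        self.rows: Dict[int, dict] = {}
        self.last_offset = -1

    def apply(self, event: ChangeEvent) -> bool:
        """Apply one event; return False if it was already applied."""
        if event.offset <= self.last_offset:
            return False  # replayed event, skip it so replays are harmless
        if event.op == "delete":
            self.rows.pop(event.key, None)
        elif event.op in ("insert", "update"):
            # No transformation: the row is copied as-is (same schema).
            self.rows[event.key] = dict(event.row or {})
        else:
            raise ValueError(f"unknown operation: {event.op}")
        self.last_offset = event.offset
        return True


# Hypothetical log produced by the single source of truth.
log = [
    ChangeEvent(0, "insert", 1, {"name": "Ana"}),
    ChangeEvent(1, "insert", 2, {"name": "Bob"}),
    ChangeEvent(2, "update", 1, {"name": "Ana Maria"}),
    ChangeEvent(3, "delete", 2),
]

replica = Replica()
for event in log:
    replica.apply(event)

# Replaying an old event changes nothing.
replayed = replica.apply(log[0])
```

Because the replica copies rows without transformation, the apply logic stays tiny. Once the two schemas diverge, that `insert`/`update` branch is where the complex transformation code would start to grow.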

Delaying the schema change for later will be good for the following reasons:

1. You are reducing the blast radius - thanks to the isolation you made.

2. You might be able to do it with tiny downtime windows, DW+Forklift, or even CDC applied in isolation to just that service.

3. You will greatly reduce your refactoring blast radius, since only your service touches that database.

4. Making the schema change the last thing does not mean your internal design has to be bad or that new features cannot use a new design.

5. Doing things in waves reduces risk and complexity.

Even without schema changes, the co-existing phase can be very long. A way to reduce that effect is to wisely choose which part of the software you migrate first. With luck, there could be an optimal path that reduces your co-existing time. You can also consider shutting down old functionality, making the whole process easier. After all, do you really need all those features? With all that data? Working exactly like that?

Strangler Pattern Spectrum

High coupling is what makes everything harder. By nature, legacy systems have poor design and high coupling, so people can easily think that it is almost impossible to apply this pattern. It might be the case that you don't have much low-hanging fruit, but it's possible.

The Strangler can be either Transparent or Intrusive. If both applications (legacy and new) are web apps, the strangler can be an HTTP proxy. But what if the legacy is a desktop or mainframe application? You should still create HTTP services for your backend, and the Strangler will still be a proxy, but you will likely create a driver for the legacy language to call from the legacy code, leading us to the intrusive part of the spectrum.

On the other axis, we have Incremental and Atomic. Meaning, can we do things in parts, or do we need to do it all or nothing (atomic)? In worst-case scenarios it will be hard to do it incrementally, right? Well, ideally, incremental means used in production, but you might still be able to deploy code, or even have code on the new service, without it being used (it might be tested by a DARK CANARY).
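One possible reading of the dark canary idea, sketched in Python: every request is answered by the legacy code, while the new code runs in the shadow and its answer is only compared and recorded. The `DarkCanary` class and both handlers are hypothetical, and a real setup would normally run the shadow call asynchronously and with side effects disabled.

```python
from typing import Callable, List, Tuple

Handler = Callable[[dict], dict]


class DarkCanary:
    """Hypothetical shadow runner: users only ever see the legacy answer."""

    def __init__(self, legacy: Handler, candidate: Handler) -> None:
        self._legacy = legacy
        self._candidate = candidate
        # (request, legacy answer, candidate answer or raised exception)
        self.mismatches: List[Tuple[dict, object, object]] = []

    def handle(self, request: dict) -> dict:
        expected = self._legacy(request)
        try:
            actual = self._candidate(request)
        except Exception as exc:  # a broken candidate must never hurt users
            self.mismatches.append((request, expected, exc))
            return expected
        if actual != expected:
            self.mismatches.append((request, expected, actual))
        return expected


# Hypothetical handlers: the new one wrongly ignores negative items.
def legacy_total(request: dict) -> dict:
    return {"total": sum(request["items"])}


def new_total(request: dict) -> dict:
    return {"total": sum(i for i in request["items"] if i > 0)}


canary = DarkCanary(legacy_total, new_total)
first = canary.handle({"items": [1, 2]})
second = canary.handle({"items": [5, -2]})
```

Here the second request exposes a behavior difference, but the caller still receives the legacy result. The new code is deployed and exercised with real traffic without being "used" in the sense of serving answers.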

Engineering has done that for ages: building 2 bridges in parallel, side by side. The only difference in engineering is that we need to wait for the second bridge to be 100% done to use it. In software we are luckier: even with the high coupling of the legacy, we have many more opportunities to do this in parallel and have incremental releases in prod, with real users using the partially done bridge, a.k.a. the new services.

I have been building bridges in software for ages (since around 2009, as far as I remember), when the world was more civilized (SOA) and we could travel and have beers (good times).

Strangler is just one way to deal with the problem. Keep in mind you have a better chance of success if you mix and match different strategies like:

* Delete features and data by doing discovery

* Migrate some of the data but skip some

* Buy products that are already done and don't require you to rewrite your whole system.

* Use Strangler in combination with Downtime, DW/Forklift, or ideally log-based CDC.

* Understand your systems so you can think about the optimal strategy

* Have realistic metrics and expectations about time and releases

Strangler and event-based CDC are important and useful architectural patterns. I hope these 2 blog posts were interesting and useful for you.

Take care.

Cheers,

Diego Pacheco